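The determinant is a number associated with an $n\times n$ matrix $A$. It is defined as

$$\det A=\begin{cases}a_{11}, & n=1\\ \sum_{j=1}^{n}a_{1j}C_{1j}, & n>1\end{cases}$$

where

- $a_{1j}$ is an element in the first row of $A$.
- $C_{1j}=(-1)^{1+j}M_{1j}$ is the cofactor associated with $a_{1j}$.
- $M_{1j}$, the minor of the element $a_{1j}$, is the determinant of the $(n-1)\times(n-1)$ matrix obtained by removing the 1st row and $j$-th column of $A$.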

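In the case of $n>1$, we say that the summation is a cofactor expansion along row 1. For example, the determinant of

$$A=\begin{pmatrix}a_{11}&a_{12}&a_{13}\\a_{21}&a_{22}&a_{23}\\a_{31}&a_{32}&a_{33}\end{pmatrix}$$

is

$$\det A=a_{11}\begin{vmatrix}a_{22}&a_{23}\\a_{32}&a_{33}\end{vmatrix}-a_{12}\begin{vmatrix}a_{21}&a_{23}\\a_{31}&a_{33}\end{vmatrix}+a_{13}\begin{vmatrix}a_{21}&a_{22}\\a_{31}&a_{32}\end{vmatrix}$$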
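Below is a minimal Python sketch of the definition. It assumes a matrix stored as a list of rows, and the helper names `minor` and `det` are our own. Python indices start at 0, so the sign $(-1)^{1+j}$ for the 1-based column $j$ becomes `(-1) ** j` for the 0-based index.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Determinant by cofactor expansion along the first row.

    n = 1: det A = a_11
    n > 1: det A = sum_j a_1j * C_1j, where C_1j = (-1)^(1+j) * M_1j
    """
    n = len(A)
    if n == 1:
        return A[0][0]
    # With a 0-based column index j, (-1)^(1 + (j + 1)) has the same sign as (-1)^j.
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(n))


A = [[2, -1, 0],
     [1, 3, 4],
     [0, 5, -2]]
print(det(A))  # -54
```

Each call makes $n$ recursive calls on $(n-1)\times(n-1)$ minors, so the work grows like $n!$. The sketch is meant for checking small examples, not for large matrices.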

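For any square matrix, the cofactor expansion along any row and any column results in the same determinant, i.e.

$$\det A=\sum_{j=1}^{n}a_{ij}C_{ij}=\sum_{i=1}^{n}a_{ij}C_{ij},\qquad C_{ij}=(-1)^{i+j}M_{ij}$$

for any row $i$ and any column $j$. Here $M_{ij}$ is the determinant of the submatrix obtained by removing the $i$-th row and $j$-th column of $A$.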

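To prove this, we first show that the cofactor expansion along any row results in the same determinant, i.e. $\det A=\sum_{j=1}^{n}a_{ij}C_{ij}$ for $i=1,2,\dots,n$. Consider a matrix $B$ obtained from $A$ by swapping row $i$ consecutively with the rows above it, $i-1$ times, until it resides in row 1. The other rows keep their relative order, so $b_{1j}=a_{ij}$ and the minor $M^{B}_{1j}$ equals $M_{ij}$. According to property 8 (see below), each swap changes the sign of the determinant, so we have

$$\det A=(-1)^{i-1}\det B=(-1)^{i-1}\sum_{j=1}^{n}b_{1j}(-1)^{1+j}M^{B}_{1j}=\sum_{j=1}^{n}a_{ij}(-1)^{i+j}M_{ij}=\sum_{j=1}^{n}a_{ij}C_{ij}$$

According to property 10, $\det A^{T}=\det A$. Since the columns of $A$ are the rows of $A^{T}$, the cofactor expansion along any column also results in the same determinant. This concludes the proof.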
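The central step of this proof can be spot-checked numerically. The following Python sketch is our own illustration, and the helpers `move_row_to_top` and `expand_along_row` are hypothetical names. It moves row $i$ to the top with adjacent swaps, confirms that the minor of $b_{1j}$ equals $M_{ij}$, and compares both $(-1)^{i-1}\det B$ and the row-$i$ expansion with $\det A$. Indices are 0-based, so moving row `i` takes `i` swaps.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row (the definition)."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def move_row_to_top(A, i):
    """Bubble row i (0-based) up to row 0 using i adjacent swaps."""
    B = [row[:] for row in A]
    for r in range(i, 0, -1):
        B[r - 1], B[r] = B[r], B[r - 1]
    return B


def expand_along_row(A, i):
    """sum_j a_ij (-1)^(i+j) M_ij; 0-based i + j has the same parity as 1-based."""
    return sum((-1) ** (i + j) * A[i][j] * det(minor(A, i, j)) for j in range(len(A)))


A = [[1, 2, 0, 3],
     [4, 0, 1, 2],
     [0, 5, 2, 1],
     [3, 1, 0, 2]]

for i in range(len(A)):
    B = move_row_to_top(A, i)
    same_minors = all(minor(B, 0, j) == minor(A, i, j) for j in range(len(A)))
    print(i + 1, same_minors,
          (-1) ** i * det(B) == det(A),
          expand_along_row(A, i) == det(A))
```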

In short, to calculate the determinant of an $n\times n$ matrix, we can carry out the cofactor expansion along any row or column. If we expand along a row, we have $\det A=\sum_{j=1}^{n}a_{ij}C_{ij}$, where we may select any row $i$ to execute the summation. Conversely, if we expand along a column, we get $\det A=\sum_{i=1}^{n}a_{ij}C_{ij}$ for any column $j$.
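Because any row or column may be used, an implementation is free to pick a convenient one. This sketch picks the row or column with the most zeros, since each zero element contributes nothing to the sum. The selection rule and the names `det_any_line` and `det_first_row` are our own illustration, not part of the text.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det_first_row(A):
    """Reference: expansion along row 1, as in the definition."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det_first_row(minor(A, 0, j)) for j in range(len(A)))


def det_any_line(A):
    """Expand along whichever row or column contains the most zeros."""
    n = len(A)
    if n == 1:
        return A[0][0]
    row_zeros = [sum(x == 0 for x in A[i]) for i in range(n)]
    col_zeros = [sum(A[i][j] == 0 for i in range(n)) for j in range(n)]
    i_best = max(range(n), key=row_zeros.__getitem__)
    j_best = max(range(n), key=col_zeros.__getitem__)
    if row_zeros[i_best] >= col_zeros[j_best]:
        # Row expansion: det A = sum over j of a_ij C_ij, with row i fixed.
        i = i_best
        return sum((-1) ** (i + j) * A[i][j] * det_any_line(minor(A, i, j))
                   for j in range(n) if A[i][j] != 0)
    # Column expansion: det A = sum over i of a_ij C_ij, with column j fixed.
    j = j_best
    return sum((-1) ** (i + j) * A[i][j] * det_any_line(minor(A, i, j))
               for i in range(n) if A[i][j] != 0)


A = [[2, 0, 1, 3],
     [0, 0, 5, 0],
     [1, 0, 4, 2],
     [3, 7, 0, 1]]
print(det_any_line(A), det_first_row(A))  # 35 35
```

Skipping zero elements is safe because $a_{ij}C_{ij}=0$ whenever $a_{ij}=0$. An all-zero row therefore gives an empty sum, which is $0$ as expected.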

The following are some useful properties of determinants:

- $\det I=1$, where $I$ is the $n\times n$ identity matrix. If one of the diagonal elements of $I$ is replaced by $k$, then the determinant of the resulting matrix is $k$.

- If the elements of one of the columns of $A$ are all zero, $\det A=0$.

- If $B$ is obtained from $A$ by multiplying the $i$-th row of $A$ by $k$, $\det B=k\det A$.

- If $B$ is obtained from $A$ by swapping two rows or two columns of $A$, then $\det B=-\det A$.

- If two rows or two columns of $A$ are the same, $\det A=0$.

- The inverse of a matrix $A$ exists only if $\det A\neq0$.

- If $A$ is singular ($\det A=0$), then $AB$ is also singular ($\det(AB)=0$).

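- If $A$ is invertible, then $A^{T}$ is invertible. If $A$ is singular ($\det A=0$), then $A^{T}$ is singular ($\det A^{T}=0$).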

- If $A$ is diagonal, then $\det A=\prod_{i=1}^{n}a_{ii}=a_{11}a_{22}\cdots a_{nn}$.
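Several of the listed properties are easy to spot-check on a concrete matrix. This Python sketch uses our own helper names and integer entries to keep the arithmetic exact. It checks the identity-matrix, zero-column, row-scaling, row- and column-swap, equal-row and diagonal-matrix properties on one $4\times4$ example. A passing check illustrates a property but does not prove it.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def identity(n):
    return [[1 if r == c else 0 for c in range(n)] for r in range(n)]


def copy(A):
    return [row[:] for row in A]


A = [[2, -1, 0, 3],
     [1, 3, 4, -2],
     [0, 5, -2, 1],
     [4, 0, 1, 1]]
n, k, d = len(A), 7, det(A)

I_k = identity(n)
I_k[2][2] = k                                  # one diagonal element replaced by k

zero_col = copy(A)
for row in zero_col:
    row[1] = 0                                 # second column set to zero

scaled = copy(A)
scaled[2] = [k * x for x in scaled[2]]         # third row multiplied by k

row_swap = copy(A)
row_swap[0], row_swap[3] = row_swap[3], row_swap[0]

col_swap = copy(A)
for row in col_swap:
    row[0], row[2] = row[2], row[0]

equal_rows = copy(A)
equal_rows[3] = equal_rows[1][:]

diag = [[3, 0, 0, 0], [0, -2, 0, 0], [0, 0, 5, 0], [0, 0, 0, 4]]

checks = {
    "det I = 1": det(identity(n)) == 1,
    "diagonal element k in I gives k": det(I_k) == k,
    "zero column gives 0": det(zero_col) == 0,
    "row times k scales det by k": det(scaled) == k * d,
    "row swap flips the sign": det(row_swap) == -d,
    "column swap flips the sign": det(col_swap) == -d,
    "two equal rows give 0": det(equal_rows) == 0,
    "diagonal: product of diagonal": det(diag) == 3 * -2 * 5 * 4,
}
for name, ok in checks.items():
    print(f"{name}: {ok}")
```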

Proof of property 1

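We shall prove this property by induction.

For $n=1$, $\det I=1$.

For $n=2$,

$$\det I=\begin{vmatrix}1&0\\0&1\end{vmatrix}=(1)(-1)^{2}(1)+(0)(-1)^{3}(0)=1$$

Let's assume that for $n=m$, $\det I_{m}=1$, where $I_{m}$ is the $m\times m$ identity matrix. Then for $n=m+1$, only the first element of row 1 is non-zero, and its minor is $\det I_{m}$:

$$\det I_{m+1}=\sum_{j=1}^{m+1}\delta_{1j}(-1)^{1+j}M_{1j}=(-1)^{2}\det I_{m}=1$$

We can repeat the above induction logic to prove that if one of the diagonal elements of $I$ is replaced by $k$, the determinant becomes $k$.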

Proof of property 2

Again, we shall prove this property by induction.

For ,

For ,

Let’s assume that for , we have

. Then for

,

Proof of property 3

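For $n=1$, where $A=(a_{11})$ with $a_{11}=0$, we have $\det A=a_{11}=0$. For $n>1$, the determinant can be computed by a cofactor expansion along any row or column. Expanding along column $j$, i.e. summing over the row index $i$, we have $\det A=\sum_{i=1}^{n}a_{ij}C_{ij}$. Since we are allowed to choose any column $j$ to execute the summation, we can always select the column $j$ such that $a_{ij}=0$ for all $i$, so that every term of the sum vanishes. Therefore, $\det A=0$ if the elements of one of the columns of $A$ are all zero.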

Proof of property 4

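Let's suppose $B$ is obtained from $A$ by multiplying the $i$-th row of $A$ by $k$, so that $b_{ij}=ka_{ij}$. If we expand $\det A$ and $\det B$ along row $i$, cofactor $C^{B}_{ij}$ is equal to cofactor $C^{A}_{ij}$, because row $i$ (the only row in which $A$ and $B$ differ) is removed when each minor is formed. Therefore,

$$\det B=\sum_{j=1}^{n}ka_{ij}C^{A}_{ij}=k\sum_{j=1}^{n}a_{ij}C^{A}_{ij}=k\det A$$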
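The following numerical illustration of this proof uses the same list-of-rows convention, and the helper names are our own. The cofactors along the scaled row are unchanged, so the row-$i$ expansion of $\det B$ is exactly $k$ times that of $\det A$.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def cofactors_along_row(A, i):
    """C_ij = (-1)^(i+j) M_ij for every column j, with row i fixed (0-based)."""
    return [(-1) ** (i + j) * det(minor(A, i, j)) for j in range(len(A))]


A = [[2, -1, 0],
     [1, 3, 4],
     [0, 5, -2]]
i, k = 1, 5                          # scale the second row (0-based index 1) by k
B = [row[:] for row in A]
B[i] = [k * x for x in A[i]]

C_A = cofactors_along_row(A, i)
C_B = cofactors_along_row(B, i)
print(C_A == C_B)                    # True: row i is removed in every minor
det_B = sum(B[i][j] * C_B[j] for j in range(len(B)))   # expansion along row i
print(det_B, k * det(A))             # -270 -270
```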

Proof of property 5

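For a type I elementary matrix $E$, the product $EA$ is $A$ with two of its rows swapped. So, $\det(EA)=-\det A$ due to property 8. Since $E$ is obtained from $I$ by swapping two rows of $I$, we have $\det E=-\det I$ according to property 8, and $\det I=1$ according to property 1, which implies that $\det E=-1$. Therefore, $\det(EA)=-\det A=\det E\det A$.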

For a type II elementary matrix $E$, which multiplies one row of $A$ by $k$, $\det(EA)=k\det A$ due to property 4, and $\det E=k$ because of property 1. So, $\det(EA)=\det E\det A$.

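For a type III elementary matrix $E$, which adds $k$ times row $l$ of $A$ to row $i$ ($l\neq i$), $\det(EA)$ is computed by expanding along row $i$. The equation $(EA)_{rj}=a_{rj}$ for all $r\neq i$ means that when $\det(EA)$ is computed by expanding along row $i$, it has the same cofactors as when $\det A$ is computed by expanding along row $i$. This implies that $C^{EA}_{ij}=C_{ij}$. Since the definition of the determinant of $EA$, expanded along row $i$, is $\det(EA)=\sum_{j=1}^{n}(EA)_{ij}C^{EA}_{ij}$, which in our case is equivalent to $\sum_{j=1}^{n}(a_{ij}+ka_{lj})C_{ij}$, we have

$$\det(EA)=\sum_{j=1}^{n}a_{ij}C_{ij}+k\sum_{j=1}^{n}a_{lj}C_{ij}$$

Thus $\det(EA)=\det A+k\det A'$, where $A'$ is the matrix $A$ with its $i$-th row replaced by its $l$-th row. Since $A'$ has two equal rows, property 9 gives $\det A'=0$, and therefore:

$$\det(EA)=\det A\qquad(5)$$

Since $E=EI$,

$$\det E=\det(EI)=\det I=1\qquad(6)$$

according to eq5 and property 1.

Comparing eq5 and eq6, $\det(EA)=\det E\det A$.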
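The three cases can be checked with explicit elementary matrices. In this sketch (our own helper names), `type_I`, `type_II` and `type_III` build $E$ from the identity matrix so that $EA$ swaps two rows, scales a row by $k$, or adds $k$ times one row to another, matching the descriptions above.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def identity(n):
    return [[1 if r == c else 0 for c in range(n)] for r in range(n)]


def matmul(X, Y):
    return [[sum(X[r][t] * Y[t][c] for t in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def type_I(n, p, q):
    """Identity with rows p and q swapped: EA swaps rows p and q of A."""
    E = identity(n)
    E[p], E[q] = E[q], E[p]
    return E


def type_II(n, p, k):
    """Identity with diagonal element (p, p) set to k: EA multiplies row p by k."""
    E = identity(n)
    E[p][p] = k
    return E


def type_III(n, p, q, k):
    """Identity with off-diagonal element (p, q) set to k: EA adds k * row q to row p."""
    E = identity(n)
    E[p][q] = k
    return E


A = [[2, -1, 0],
     [1, 3, 4],
     [0, 5, -2]]
n = len(A)
for E in (type_I(n, 0, 2), type_II(n, 1, 4), type_III(n, 2, 0, -3)):
    print(det(E), det(matmul(E, A)) == det(E) * det(A))
```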

Proof of property 6

Case 1

If $A$ is singular, where $\det A=0$, then $AB$ is also singular, i.e. $\det(AB)=0$, according to property 12. So,

$$\det(AB)=0=\det A\det B$$

Case 2

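If $A$ is non-singular, it can always be expressed as a product of elementary matrices: $A=E_{1}E_{2}\cdots E_{m}$. So,

$$\det(AB)=\det(E_{1}E_{2}\cdots E_{m}B)$$

Since property 5 states that $\det(EA)=\det E\det A$ for any elementary matrix $E$,

$$\det(AB)=\det E_{1}\det(E_{2}\cdots E_{m}B)=\cdots=\det E_{1}\det E_{2}\cdots\det E_{m}\det B$$

Similarly, $\det A=\det(E_{1}E_{2}\cdots E_{m})=\det E_{1}\det E_{2}\cdots\det E_{m}$. Substituting this in the above equation gives $\det(AB)=\det A\det B$.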
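The following is a randomized spot check of $\det(AB)=\det A\det B$, including a singular $A$ as in Case 1. It is a sketch with a fixed seed, and the helper `check_product_rule` is our own. Passing trials illustrate the property but do not replace the proof.

```python
import random


def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def matmul(X, Y):
    return [[sum(X[r][t] * Y[t][c] for t in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


def check_product_rule(trials=20, seed=0):
    """Compare det(AB) with det(A) * det(B) on random integer matrices."""
    rng = random.Random(seed)
    for _ in range(trials):
        n = rng.randint(1, 4)
        A = [[rng.randint(-5, 5) for _ in range(n)] for _ in range(n)]
        B = [[rng.randint(-5, 5) for _ in range(n)] for _ in range(n)]
        if det(matmul(A, B)) != det(A) * det(B):
            return False
    return True


# Case 1 of the proof: A has two equal rows, so det A = 0 and det(AB) = 0.
A = [[1, 2, 3], [1, 2, 3], [0, 1, 4]]
B = [[2, 0, 1], [1, 1, 0], [3, -1, 2]]
print(det(A), det(matmul(A, B)))  # 0 0
print(check_product_rule())       # True
```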

Proof of property 7

Using property 6 and then property 2,

Proof of property 8

We shall prove this property by induction. $n=1$ is the trivial case, since a $1\times1$ matrix has no two rows to swap. Here $n$ denotes the order (the number of rows) of the square matrix.

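For $n=2$, let

$$A=\begin{pmatrix}a_{11}&a_{12}\\a_{21}&a_{22}\end{pmatrix}$$

and

$$B=\begin{pmatrix}a_{21}&a_{22}\\a_{11}&a_{12}\end{pmatrix}$$

which is obtained from $A$ by swapping two adjacent rows. Then $\det A=a_{11}a_{22}-a_{12}a_{21}$ and $\det B=a_{21}a_{12}-a_{22}a_{11}$. Clearly,

$$\det B=-\det A$$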

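Let's assume that for $n=k$, $\det B=-\det A$ when two adjacent rows are swapped. For $n=k+1$, we expand both determinants along the first row:

$$\det A=\sum_{j=1}^{k+1}a_{1j}(-1)^{1+j}M^{A}_{1j},\qquad\det B=\sum_{j=1}^{k+1}b_{1j}(-1)^{1+j}M^{B}_{1j}$$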

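Case 1: Suppose that the first row of $A$ is not swapped when making $B$. Then $b_{1j}=a_{1j}$, and $M^{B}_{1j}$ is the determinant of an order-$k$ matrix, which is the same as the submatrix defining $M^{A}_{1j}$ except for two adjacent rows being swapped. Therefore, by the induction assumption, $M^{B}_{1j}=-M^{A}_{1j}$ and $\det B=-\det A$.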

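Case 2: Suppose the first two rows of $A$ are swapped when making $B$. We have $b_{1j}=a_{2j}$ and $b_{2j}=a_{1j}$, while all other rows are unchanged. With $n=k+1$, the minors $M^{A}_{1j}$ and $M^{B}_{1j}$ can be expressed as

$$M^{A}_{1j}=(1-\delta_{j1})\sum_{l=1}^{j-1}(-1)^{1+l}a_{2l}N_{lj}+\sum_{l=j+1}^{n}(-1)^{l}a_{2l}N_{lj}$$

$$M^{B}_{1j}=(1-\delta_{j1})\sum_{l=1}^{j-1}(-1)^{1+l}a_{1l}N_{lj}+\sum_{l=j+1}^{n}(-1)^{l}a_{1l}N_{lj}$$

where $N_{lj}$ ($=N_{jl}$) is the determinant of $A$ with the first two rows, and the $l$-th and $j$-th columns removed.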

Question

Why is each of the minors expressed as two separate sums?

Answer

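The minor $M^{A}_{1j}$ is the determinant of the submatrix of $A$ with the first row and the $j$-th column of $A$ removed. The first row of this submatrix is the second row of $A$ without $a_{2j}$, so we expand the submatrix along that row. Removing column $j$ shifts every column $l>j$ one place to the left. This is why the sign is $(-1)^{1+l}$ for $l<j$ but $(-1)^{1+(l-1)}=(-1)^{l}$ for $l>j$.

If $j=1$, the term with the Kronecker delta disappears and $M^{A}_{11}=\sum_{l=2}^{n}(-1)^{l}a_{2l}N_{l1}$, where $N_{l1}$ is the determinant of $A$ with the first two rows, and the $l$-th and 1st columns removed. If $1<j<n$, one of the columns between the 1st and the last columns of $A$ is removed in forming the submatrix. Therefore, both summations are employed in determining $M^{A}_{1j}$, with the first from $l=1$ to $j-1$ and the second from $l=j+1$ to $n$. The same expression also applies when $j=n$, in which case the second summation is empty. Finally, the same logic explains the formula for $M^{B}_{1j}$, whose submatrix has $a_{1l}$ instead of $a_{2l}$ in its first row. You can validate the formulae of both minors using a concrete example.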
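One way to validate the two-sum expressions for $M^{A}_{1j}$ and $M^{B}_{1j}$ is to compare them with minors computed directly. This sketch is our own, and `N` and `first_row_minor_two_sums` are hypothetical helper names. With 0-based `l`, the sign $(-1)^{1+l}$ becomes `(-1) ** l` and $(-1)^{l}$ becomes `(-1) ** (l + 1)`. An empty `range(j)` for `j = 0` plays the role of the Kronecker-delta factor. The sketch assumes $n\ge3$, so that $N_{lj}$ is the determinant of a non-empty matrix.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def N(A, l, j):
    """det of A with the first two rows and columns l and j removed (l != j)."""
    keep = [c for c in range(len(A)) if c not in (l, j)]
    return det([[A[r][c] for c in keep] for r in range(2, len(A))])


def first_row_minor_two_sums(A, j):
    """M_1j of A from the two sums over l < j and l > j (0-based j, needs n >= 3)."""
    n = len(A)
    left = sum((-1) ** l * A[1][l] * N(A, l, j) for l in range(j))
    right = sum((-1) ** (l + 1) * A[1][l] * N(A, l, j) for l in range(j + 1, n))
    return left + right


A = [[1, 2, 0, 3],
     [4, 0, 1, 2],
     [0, 5, 2, 1],
     [3, 1, 0, 2]]
B = [A[1][:], A[0][:]] + [row[:] for row in A[2:]]   # first two rows swapped

for j in range(len(A)):
    print(j + 1,
          first_row_minor_two_sums(A, j) == det(minor(A, 0, j)),
          first_row_minor_two_sums(B, j) == det(minor(B, 0, j)))
print(det(B) == -det(A))
```

Applying the same function to `B` uses $a_{1l}$ in place of $a_{2l}$, because the second row of $B$ is the first row of $A$, while $N_{lj}$ is unchanged.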

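Therefore,

$$\det A=\sum_{j=1}^{n}\sum_{l<j}(-1)^{j+l}a_{1j}a_{2l}N_{lj}+\sum_{j=1}^{n}\sum_{l>j}(-1)^{1+j+l}a_{1j}a_{2l}N_{lj}$$

$$\det B=\sum_{j=1}^{n}\sum_{l<j}(-1)^{j+l}a_{2j}a_{1l}N_{lj}+\sum_{j=1}^{n}\sum_{l>j}(-1)^{1+j+l}a_{2j}a_{1l}N_{lj}$$

For any pair of values of $j$ and $l$, where $l<j$, the terms in the first double sum of $\det B$ are $(-1)^{j+l}a_{2j}a_{1l}N_{lj}$. These differ from the terms in the second double sum of $\det A$ with the roles of $j$ and $l$ interchanged, i.e. $(-1)^{1+l+j}a_{1l}a_{2j}N_{jl}$, by a factor of $-1$. Similarly, for any pair of values of $j$ and $l$, where $l>j$, the terms in the second double sum of $\det B$ are $(-1)^{1+j+l}a_{2j}a_{1l}N_{lj}$. These again differ from the terms in the first double sum of $\det A$ with $j$ and $l$ interchanged, i.e. $(-1)^{l+j}a_{1l}a_{2j}N_{jl}$, by a factor of $-1$. Since all terms in $\det B$ differ from all corresponding terms in $\det A$ by a factor of $-1$,

$$\det B=-\det A$$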

In general, the swapping of any two rows and

of

, where

, is equivalent to the swapping of

adjacent rows of

, with each swap changing

by a factor of -1. Therefore,

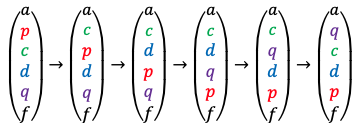

Question

How do we get ?

Answer

Firstly, we swap row consecutively with each row below it until row

is swapped, resulting in

swaps. Then, swap the previous row

, which now resides in what was row

, consecutively with each row above it until it becomes what used to be row

, resulting in

swaps. These two actions combined are equivalent to the swapping of

with

, with a total of

swaps of adjacent rows. The diagram below illustrates an example of the swaps:

Finally, the swapping of any two columns is proven in a similar way.
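The swap sequence described in the answer can be replayed in code. This sketch uses a hypothetical helper `swap_via_adjacent` and 0-based row indices. It performs only adjacent swaps, counts them, and checks that the result equals a direct swap of rows $p$ and $q$.

```python
def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def swap_via_adjacent(A, p, q):
    """Swap rows p < q (0-based) using only adjacent swaps; return (B, swap count)."""
    B = [row[:] for row in A]
    count = 0
    # Step 1: move row p down past rows p+1, ..., q  (q - p swaps).
    for r in range(p, q):
        B[r], B[r + 1] = B[r + 1], B[r]
        count += 1
    # Step 2: the old row q now sits at q-1; move it up to position p  (q - p - 1 swaps).
    for r in range(q - 1, p, -1):
        B[r - 1], B[r] = B[r], B[r - 1]
        count += 1
    return B, count


A = [[1, 2, 0, 3, 1],
     [4, 0, 1, 2, 2],
     [0, 5, 2, 1, 0],
     [3, 1, 0, 2, 1],
     [2, 2, 1, 0, 3]]
p, q = 1, 4
B, count = swap_via_adjacent(A, p, q)
direct = [row[:] for row in A]
direct[p], direct[q] = direct[q], direct[p]
print(B == direct, count, 2 * (q - p) - 1)   # True 5 5
print(det(B) == -det(A))                     # True
```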

Proof of property 9

Consider the swapping of two equal rows of $A$ to form $B$, resulting in $B=A$ and $\det B=\det A$. However, property 8 states that $\det B=-\det A$ if any two rows of $A$ are swapped. Therefore, $\det A=-\det A$, i.e. $\det A=0$, if two rows of $A$ are equal. The same logic applies to proving $\det A=0$ if there are two equal columns of $A$.

Proof of property 10

Case 1:

If $\det A=0$, then $\det A^{T}=0$ according to property 13. So, $\det A^{T}=\det A$.

Case 2:

Let's first consider elementary matrices $E$. A type I elementary matrix is symmetric about its diagonal, while a type II elementary matrix is the identity matrix with one diagonal element equal to $k$. Therefore, $E^{T}=E$, and thus $\det E^{T}=\det E$, for type I or II elementary matrices. A type III elementary matrix is an identity matrix with one of the non-diagonal elements replaced by a constant $k$. Therefore, if $E$ is a type III elementary matrix, then $E^{T}$ is also one. According to eq6, $\det E^{T}=1=\det E$ for a type III elementary matrix. Hence, $\det E^{T}=\det E$ for all elementary matrices.

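Next, consider an invertible matrix $A$, which (as proven in the previous article) can be expressed as $A=E_{1}E_{2}\cdots E_{m}$. Thus, $A^{T}=E_{m}^{T}\cdots E_{2}^{T}E_{1}^{T}$ (see Q&A in the proof of property 13). According to property 5,

$$\det A^{T}=\det E_{m}^{T}\cdots\det E_{2}^{T}\det E_{1}^{T}=\det E_{m}\cdots\det E_{2}\det E_{1}$$

and

$$\det A=\det E_{1}\det E_{2}\cdots\det E_{m}$$

Therefore, $\det A^{T}=\det A$.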
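The following is a quick numerical check of $\det A^{T}=\det A$. It also shows that the transpose of a type III elementary matrix is again type III. The helpers and the fixed seed are our own choices for illustration.

```python
import random


def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def transpose(A):
    return [list(col) for col in zip(*A)]


def check_transpose_rule(trials=20, seed=0):
    """Compare det(A^T) with det(A) on random integer matrices (some singular)."""
    rng = random.Random(seed)
    for _ in range(trials):
        n = rng.randint(1, 4)
        A = [[rng.randint(-3, 3) for _ in range(n)] for _ in range(n)]
        if det(transpose(A)) != det(A):
            return False
    return True


# A type III elementary matrix: its transpose is again type III, and both have det 1.
E = [[1, 0, 0],
     [0, 1, 0],
     [4, 0, 1]]
print(transpose(E), det(E), det(transpose(E)))  # [[1, 0, 4], [0, 1, 0], [0, 0, 1]] 1 1
print(check_transpose_rule())                    # True
```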

Proof of property 11

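We have $A^{-1}A=I$, or in terms of matrix components:

$$\sum_{k=1}^{n}\left(A^{-1}\right)_{jk}a_{ki}=\delta_{ji}\qquad(7)$$

Consider the matrix $A'$ that is obtained from the matrix $A$ by replacing the $j$-th column of $A$ with the $i$-th column, i.e. $a'_{kj}=a_{ki}$ for $k=1,2,\dots,n$ and $i\neq j$, while all other columns are unchanged. According to property 9, $\det A'=0$ because $A'$ has two equal columns. Furthermore, cofactor $C'_{kj}$ of $A'$ is equal to cofactor $C_{kj}$ of $A$ for $k=1,2,\dots,n$, because forming either cofactor removes the $j$-th column, the only column in which $A'$ and $A$ differ. Therefore, expanding $\det A'$ along column $j$,

$$0=\det A'=\sum_{k=1}^{n}a'_{kj}C'_{kj}=\sum_{k=1}^{n}a_{ki}C_{kj}\qquad(i\neq j)\qquad(8)$$

When $i=j$, the last summation in eq8 becomes the cofactor expansion of $A$ along column $j$:

$$\sum_{k=1}^{n}a_{kj}C_{kj}=\det A\qquad(9)$$

Combining eq8 and eq9, we have $\sum_{k=1}^{n}a_{ki}C_{kj}=\delta_{ij}\det A$, which when compared with eq7 gives:

$$\left(A^{-1}\right)_{jk}=\frac{C_{kj}}{\det A}$$

Therefore, $A^{-1}$ is the transpose of the matrix of cofactors divided by $\det A$, which implies that the inverse of $A$ is undefined if $\det A=0$. We call such a matrix a singular matrix, and a matrix with an associated inverse a non-singular matrix.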
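The result $(A^{-1})_{jk}=C_{kj}/\det A$ translates directly into code. This sketch uses our own helper names and Python's `fractions.Fraction` so the entries stay exact. It raises an error when $\det A=0$, and it also checks eq8 and eq9 together, i.e. $\sum_k a_{ki}C_{kj}=\delta_{ij}\det A$. Because it relies on cofactor expansion, it is practical only for small matrices.

```python
from fractions import Fraction


def minor(A, i, j):
    """Return A with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]


def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def cofactor(A, i, j):
    return (-1) ** (i + j) * det(minor(A, i, j))


def inverse(A):
    """(A^-1)_jk = C_kj / det A; raises ValueError if det A = 0 (A is singular)."""
    d = det(A)
    if d == 0:
        raise ValueError("det A = 0, so A has no inverse")
    n = len(A)
    return [[Fraction(cofactor(A, k, j), d) for k in range(n)] for j in range(n)]


def matmul(X, Y):
    return [[sum(X[r][t] * Y[t][c] for t in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


A = [[2, -1, 0],
     [1, 3, 4],
     [0, 5, -2]]
A_inv = inverse(A)
print(matmul(A_inv, A) == [[1, 0, 0], [0, 1, 0], [0, 0, 1]])  # True

# eq8 and eq9 together: sum_k a_ki C_kj = delta_ij * det A
n = len(A)
print(all(sum(A[k][i] * cofactor(A, k, j) for k in range(n)) == (det(A) if i == j else 0)
          for i in range(n) for j in range(n)))  # True
```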

Proof of property 12

We shall prove this by contradiction. According to property 11, $A$ has no inverse if $\det A=0$. If $A$ has no inverse and $AB$ has an inverse, then $AB(AB)^{-1}=I$. This implies that $A$ has an inverse $C=B(AB)^{-1}$, which satisfies $AC=I$. This contradicts the initial assumption that $A$ has no inverse. Therefore, if $A$ has no inverse, then $AB$ must also have no inverse.

Proof of property 13

Question

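Show that $(AB)^{T}=B^{T}A^{T}$.

Answer

$$\left[(AB)^{T}\right]_{ij}=(AB)_{ji}=\sum_{k}a_{jk}b_{ki}$$

because the $ij$-th element of a transpose is the $ji$-th element of the original matrix, and

$$\sum_{k}a_{jk}b_{ki}=\sum_{k}\left(B^{T}\right)_{ik}\left(A^{T}\right)_{kj}=\left(B^{T}A^{T}\right)_{ij}$$

because $b_{ki}=(B^{T})_{ik}$ and $a_{jk}=(A^{T})_{kj}$. Therefore, $(AB)^{T}=B^{T}A^{T}$, which can be extended to $(A_{1}A_{2}\cdots A_{m})^{T}=A_{m}^{T}\cdots A_{2}^{T}A_{1}^{T}$.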
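The following numerical check covers the identity and its extension to three factors. The sketch uses our own `transpose` and `matmul` helpers. Rectangular factors are used deliberately: the identity holds for any compatible shapes, and the reversed order is then forced by the dimensions.

```python
def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(X, Y):
    return [[sum(X[r][t] * Y[t][c] for t in range(len(Y))) for c in range(len(Y[0]))]
            for r in range(len(X))]


A = [[1, 2, 0], [3, -1, 4]]         # 2 x 3
B = [[2, 1], [0, 5], [-3, 2]]       # 3 x 2
C = [[1, -1], [2, 0]]               # 2 x 2

# (AB)^T = B^T A^T
print(transpose(matmul(A, B)) == matmul(transpose(B), transpose(A)))  # True
# (ABC)^T = C^T B^T A^T
print(transpose(matmul(matmul(A, B), C))
      == matmul(matmul(transpose(C), transpose(B)), transpose(A)))    # True
```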

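Using the identity in the above Q&A, $A^{T}\left(A^{-1}\right)^{T}=\left(A^{-1}A\right)^{T}$. If $A$ is invertible, then $A^{T}\left(A^{-1}\right)^{T}=I^{T}=I$. This implies that $\left(A^{-1}\right)^{T}$ is the inverse of $A^{T}$, and therefore that $A^{T}$ is invertible if $A$ is invertible.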

The last part shall be proven by contradiction. Suppose $A$ is singular and $A^{T}$ is non-singular. Then there would be a matrix $C$ such that $A^{T}C=I$. Furthermore, $\left(A^{T}C\right)^{T}=C^{T}A=I^{T}=I$, which implies that $C^{T}$ is the inverse of $A$. This contradicts our initial assumption that $A$ is singular. Therefore, if $A$ is singular, $A^{T}$ must also be singular.

Proof of property 14

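We shall prove this property by induction. For $n=1$, $\det A=a_{11}$.

Let's assume that for $n=k$, $\det A=\prod_{i=1}^{k}a_{ii}$. Then for $n=k+1$, the cofactor expansion along the first row is

$$\det A=\sum_{j=1}^{k+1}a_{1j}C_{1j}=a_{11}M_{11}=a_{11}\prod_{i=2}^{k+1}a_{ii}=\prod_{i=1}^{k+1}a_{ii}$$

because $a_{1j}=0$ for $j\neq1$, and $M_{11}$ is the determinant of a $k\times k$ diagonal matrix with diagonal elements $a_{22},\dots,a_{k+1,k+1}$.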