Quantum key distribution is the exchange, between two parties, of a cryptographic key that is generated using the principles of quantum mechanics. In computing and telecommunications, messages are represented by binary codes, e.g. the binary code for the letter ‘m’ is 1101101, where each digit is called a binary digit or a bit. One way for a sender to encrypt a message is to generate a key of the same bit-length as the message, e.g. 0001100, and add it to the message digit by digit modulo 2 (i.e. no digit is carried or borrowed):

$$
\begin{array}{cr}
  & 1101101 \\
+ & 0001100 \\
\hline
  & 1100001
\end{array}
$$

Under the American Standard Code for Information Interchange (ASCII), the encrypted message reads ‘a’ instead of ‘m’. To decode the message, the receiver simply performs a second modular addition on the encrypted message using the same key:

$$
\begin{array}{cr}
  & 1100001 \\
+ & 0001100 \\
\hline
  & 1101101
\end{array}
$$
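The two additions above can be reproduced in a few lines. This is a minimal sketch: the helper names `to_bits`, `add_mod2` and `from_bits` are illustrative, and it assumes 7-bit ASCII codes as in the example.

```python
def to_bits(ch: str) -> str:
    """7-bit ASCII code of a single character, e.g. 'm' -> '1101101'."""
    return format(ord(ch), "07b")

def add_mod2(message_bits: str, key_bits: str) -> str:
    """Digit-by-digit addition modulo 2 (no carrying), i.e. XOR."""
    if len(message_bits) != len(key_bits):
        raise ValueError("the key must have the same bit-length as the message")
    return "".join(str((int(m) + int(k)) % 2) for m, k in zip(message_bits, key_bits))

def from_bits(bits: str) -> str:
    """Character whose ASCII code is the given bit string."""
    return chr(int(bits, 2))

key = "0001100"
cipher = add_mod2(to_bits("m"), key)
print(cipher, from_bits(cipher))  # 1100001 a
plain = add_mod2(cipher, key)     # adding the same key again undoes the encryption
print(plain, from_bits(plain))    # 1101101 m
```

Decryption works because adding the same bit twice modulo 2 always gives 0, so the key cancels itself out.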

Theoretically, one way to obtain such a key is to generate it randomly using the principles of quantum entanglement.

Let’s suppose:

-

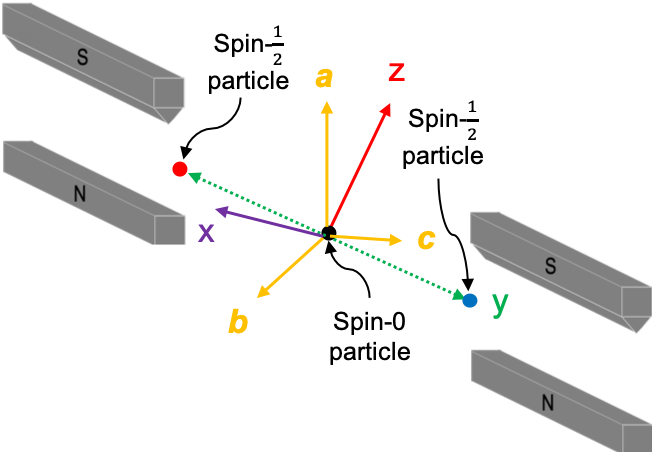

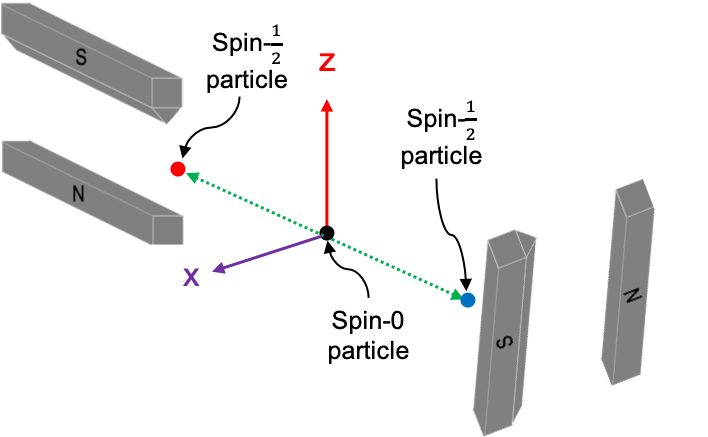

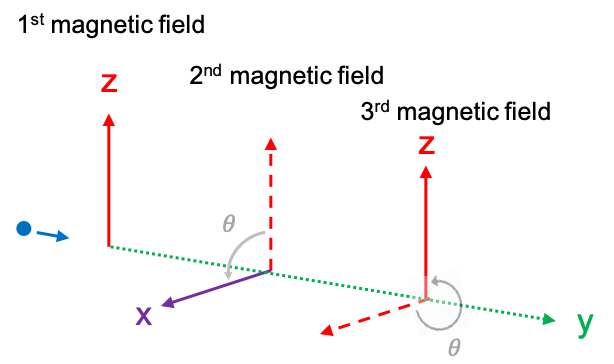

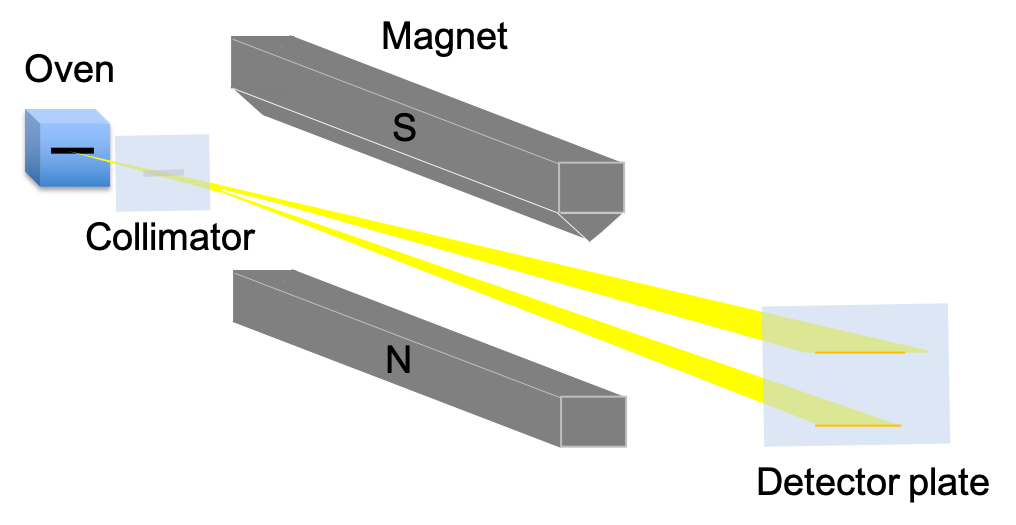

- The sender and the receiver both have Stern-Gerlach devices, which can only be oriented along the

-axis or the

-axis.

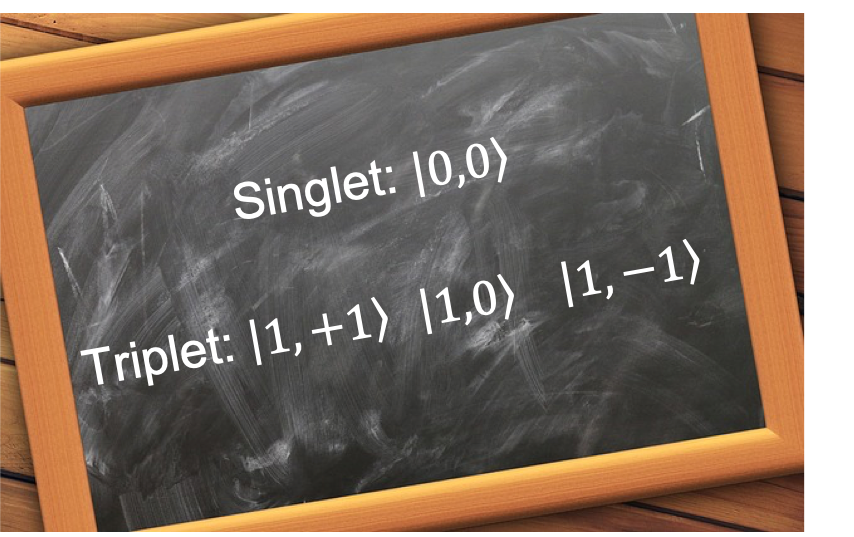

- The sender creates seven pairs of entangled spin-

For each pair of entangled particles, one particle is measured by the sender using a randomly selected axis and the other by the receiver, also using a randomly selected axis.

- The sender and the receiver both have Stern-Gerlach devices, which can only be oriented along the

We may end up with the following:

| Pair | Sender axis | Sender bit | Receiver axis | Receiver bit |
|:---:|:---:|:---:|:---:|:---:|
| 1 | x | 0 | x | 1 |
| 2 | z | 1 | x | 1 |
| 3 | z | 1 | z | 0 |
| 4 | x | 0 | z | 0 |
| 5 | z | 0 | x | 1 |
| 6 | x | 0 | z | 0 |
| 7 | z | 0 | z | 1 |

where 0 and 1 denote an up-spin and a down-spin respectively. Since 0 and 1 are associated with a particle’s spin, which is a quantum mechanical property, we call them quantum bits, or qubits.
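A table like the one above can be generated with a small simulation. This is a sketch under a stated assumption: as the table suggests, the pairs are taken to be anticorrelated, so measurements along the same axis always give opposite results, while measurements along $x$ and $z$ give independent, equally likely results. This classical sampling reproduces the statistics only for these two perpendicular axes; it is not a general simulation of entanglement. The function names are hypothetical.

```python
import random

AXES = ("x", "z")  # the only two orientations allowed for the Stern-Gerlach devices

def measure_pair(sender_axis: str, receiver_axis: str, rng: random.Random) -> tuple[int, int]:
    """Return (sender bit, receiver bit) for one pair; 0 = up-spin, 1 = down-spin.

    Assumed model: along the same axis the results are always opposite;
    along x and z they are independent and each equally likely.
    """
    sender_bit = rng.randint(0, 1)        # the sender's outcome is 50/50
    if sender_axis == receiver_axis:
        receiver_bit = 1 - sender_bit     # perfect anticorrelation
    else:
        receiver_bit = rng.randint(0, 1)  # perpendicular axes: no correlation
    return sender_bit, receiver_bit

def run_protocol(n_pairs: int, seed=None) -> list[tuple[str, int, str, int]]:
    """Both parties pick an axis at random for every pair; returns the table rows."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_pairs):
        sender_axis, receiver_axis = rng.choice(AXES), rng.choice(AXES)
        sender_bit, receiver_bit = measure_pair(sender_axis, receiver_axis, rng)
        rows.append((sender_axis, sender_bit, receiver_axis, receiver_bit))
    return rows

# Print seven rows in the same layout as the table: pair, axis, bit, axis, bit
for pair, row in enumerate(run_protocol(7, seed=1), start=1):
    print(pair, *row)
```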

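To complete the protocol, both parties share their axes of measurement (which can be communicated publicly) and agree to construct the key as follows:

- Retain the sender’s bits for measurements made using the same axes.
- Convert all other qubits to 0.

Because the two particles in a pair always give opposite results when measured along the same axis (pairs 1, 3 and 7 above), the receiver can recover each retained bit of the sender by flipping their own bit.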
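The two rules can be applied mechanically to the table. In this sketch, `build_key` follows the rules as stated, while `receiver_key` (a hypothetical helper) shows how the receiver could arrive at the same key without seeing the sender's bits, assuming the anticorrelation visible in the table.

```python
# Rows of the first table: (sender axis, sender bit, receiver axis, receiver bit)
TABLE = [
    ("x", 0, "x", 1),
    ("z", 1, "x", 1),
    ("z", 1, "z", 0),
    ("x", 0, "z", 0),
    ("z", 0, "x", 1),
    ("x", 0, "z", 0),
    ("z", 0, "z", 1),
]

def build_key(rows) -> str:
    """Keep the sender's bit where the axes match; otherwise use 0."""
    return "".join(str(s) if a == b else "0" for a, s, b, _ in rows)

def receiver_key(rows) -> str:
    """Receiver's view: flip their own bit where the axes match; otherwise use 0."""
    return "".join(str(1 - r) if a == b else "0" for a, _, b, r in rows)

key = build_key(TABLE)
print(key)  # 0010000

# Encrypt 'm' (1101101) with the key; ^ is bitwise addition modulo 2 (XOR)
cipher = int("1101101", 2) ^ int(key, 2)
print(format(cipher, "07b"), chr(cipher))  # 1111101 }
```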

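The key in the above example is therefore 0010000 (only pairs 1, 3 and 7 were measured along the same axes), and the letter ‘m’ (1101101) is encoded as the closing brace ‘}’ (1111101). If a third party intercepts every particle sent to the receiver with another Stern-Gerlach device, we may have the following (the Sender and Receiver columns repeat the previous table):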

| Pair | Sender axis | Sender state | Receiver axis | Receiver state | Interceptor axis | Interceptor state | Receiver* axis | Receiver* state |
|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|
| 1 | x | 0 | x | 1 | z | 0 | x | 0 |
| 2 | z | 1 | x | 1 | x | 1 | x | 1 |
| 3 | z | 1 | z | 0 | z | 0 | z | 0 |
| 4 | x | 0 | z | 0 | z | 0 | z | 0 |
| 5 | z | 0 | x | 1 | z | 1 | x | 1 |
| 6 | x | 0 | z | 0 | x | 1 | z | 0 |
| 7 | z | 0 | z | 1 | x | 1 | z | 0 |

Let’s suppose the interceptor then immediately sends the receiver unentangled particles prepared in the same states as the ones he measured (i.e. before the sender and receiver publicly share their axes). The receiver’s measurements may then be as given under ‘Receiver*’, where:

- The receiver measures the same state as the interceptor if both use the same axis (only for 2, 3 and 4).
- Otherwise, the receiver may or may not measure the same state as the interceptor.
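The check the two parties perform can be expressed directly on the interception table: among the pairs measured along the same axis, the states should be opposite, so any pair with identical states is a discrepancy. The function name below is hypothetical.

```python
# Rows of the interception table: (sender axis, sender state, Receiver* axis, Receiver* state)
INTERCEPTED = [
    ("x", 0, "x", 0),
    ("z", 1, "x", 1),
    ("z", 1, "z", 0),
    ("x", 0, "z", 0),
    ("z", 0, "x", 1),
    ("x", 0, "z", 0),
    ("z", 0, "z", 0),
]

def discrepancies(rows) -> list[int]:
    """Pair numbers (1-based) where the axes match but the states are not opposite."""
    return [i for i, (a, s, b, r) in enumerate(rows, start=1) if a == b and s == r]

print(discrepancies(INTERCEPTED))  # [1, 7]
```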

When the sender and receiver eventually share their axes of measurement, and then share pre-determined parts of their measured states, they will realise from the discrepancies in 1 and 7 (same axes, yet identical rather than opposite states) that their channel of communication has been compromised, leading them to discard the key. Of course, by chance, the measurements of ‘Receiver*’ for 1 and 7 could have been 1 and 1 respectively, in which case no discrepancy would appear. On average, the sender and receiver choose the same axis while the interceptor selects a different one in $\tfrac{1}{2}\times\tfrac{1}{2}=\tfrac{1}{4}$ of the measurements, and in half of those cases the interceptor sends the ‘wrong’ state to the receiver. Each shared measurement therefore reveals a discrepancy with probability $\tfrac{1}{8}$, so the probability of the sender and receiver detecting at least one discrepancy when they compare $n$ randomly selected measured states is

$$
P_{\text{detect}} = 1 - \left(\tfrac{7}{8}\right)^{n}
$$

(assuming the shared states are representative of a randomly selected segment), which approaches 1 rapidly as $n$ grows and is therefore very significant if the raw key is relatively long. For example, if the raw key has 72 qubits, of which 40 are selected at random and their states shared between the sender and the receiver, about 20 of those 40 will have been measured along the same axes and retained, and on average $40 \times \tfrac{1}{8} = 5$ of these retained qubits will be erroneous. For $n = 40$, $P_{\text{detect}}$ already exceeds 99%.
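The detection probability can be checked numerically. Per compared pair, an error requires the sender and receiver to use the same axis (probability 1/2), the interceptor to use the other axis (1/2), and the resent state to give the wrong result (1/2), i.e. 1/8 overall, which is consistent with 5 erroneous qubits out of 40 shared. The sketch below computes $1 - (7/8)^n$ and, as a cross-check, runs a Monte Carlo of the intercept-and-resend attack under the same assumed anticorrelation model (same axis gives opposite results; $x$ versus $z$ gives independent 50/50 results). Function names are hypothetical.

```python
import random

def detection_probability(n_shared: int) -> float:
    """P(at least one discrepancy) when n_shared measured states are compared,
    each erroneous independently with probability 1/8."""
    return 1 - (7 / 8) ** n_shared

def simulate_error_rate(n_pairs: int, seed: int = 0) -> float:
    """Fraction of pairs showing a discrepancy under intercept-and-resend."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(n_pairs):
        a, b, e = rng.choice("xz"), rng.choice("xz"), rng.choice("xz")  # sender, receiver, interceptor axes
        sender = rng.randint(0, 1)
        # The interceptor measures the receiver's particle along axis e
        intercepted = 1 - sender if e == a else rng.randint(0, 1)
        # The receiver measures the resent, unentangled particle along axis b
        received = intercepted if b == e else rng.randint(0, 1)
        if a == b and received == sender:  # opposite results were expected
            errors += 1
    return errors / n_pairs

print(detection_probability(40))     # ≈ 0.9952
print(40 * 1 / 8)                    # 5.0 erroneous qubits expected among 40 shared
print(simulate_error_rate(100_000))  # close to 0.125
```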

In short, quantum key distribution provides a way to share a key for securely encrypting a message, as it allows the presence of an interceptor to be detected. However, it is difficult to construct an infrastructure for quantum key distribution using Stern-Gerlach devices. In practice, entangled photon pairs, photon polarisation states and optical devices are used; the BB84 protocol, for example, encodes bits in the polarisation states of single photons.