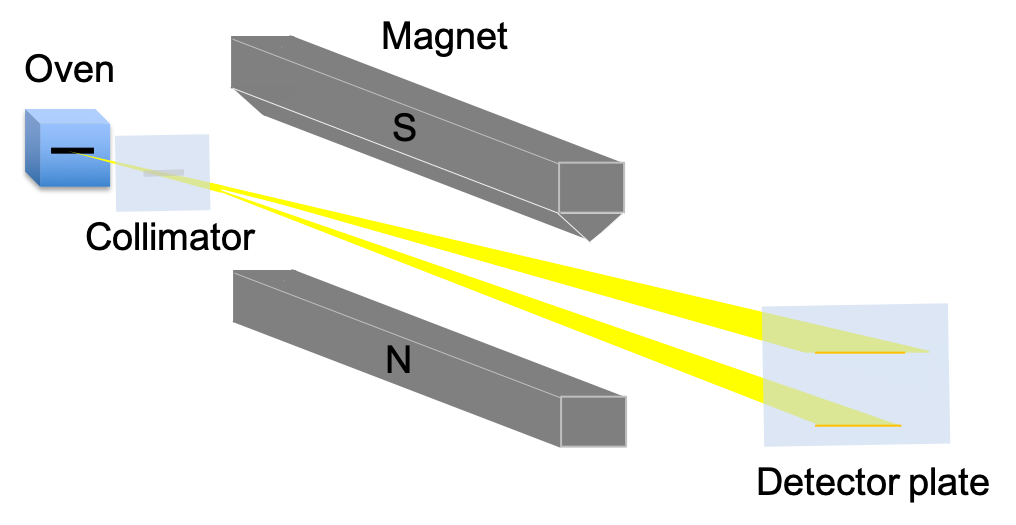

The gyromagnetic ratio of the electron can be evaluated by the following experiment:

In the above diagram, a sample of hydrogen atoms is placed in a uniform magnetic field (1 T) and irradiated with microwaves at different frequencies. When the electromagnetic source is turned off, no absorption is detected. However, as the frequency of the source is varied across the microwave range, an absorption is observed at $\nu\approx28\ \text{GHz}$, indicating an electronic transition between two different energy states (the transition frequency for the proton is in the MHz range).

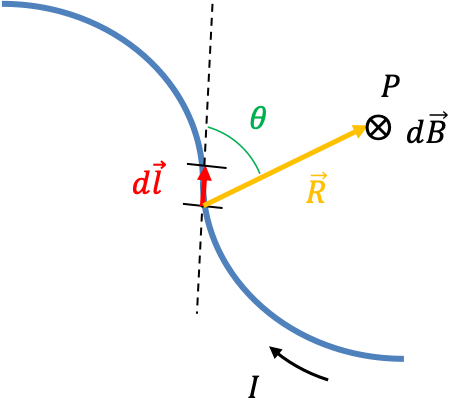

Since the electron in a hydrogen atom is in the 1s orbital ($l=0$), the atom’s angular momentum is attributed to its electron spin angular momentum (the magnetic dipole moment of the nucleus is relatively weak). From eq67, the classical relation between the energy $E$ of a charged particle in a magnetic field $\boldsymbol{B}$ and the particle’s angular momentum $\boldsymbol{L}$ is $E=-\gamma\boldsymbol{B}\cdot\boldsymbol{L}$, whose spin analogue is:

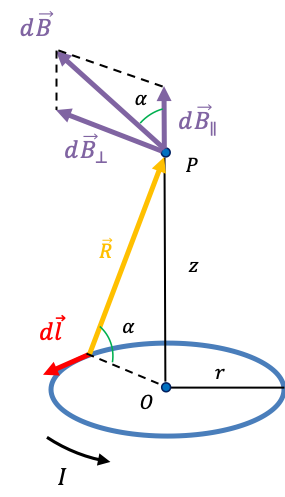

Analysing the effect of the uniform magnetic field (with magnitude $B$) on the energy states of hydrogen in the $z$-direction, the above equation becomes:

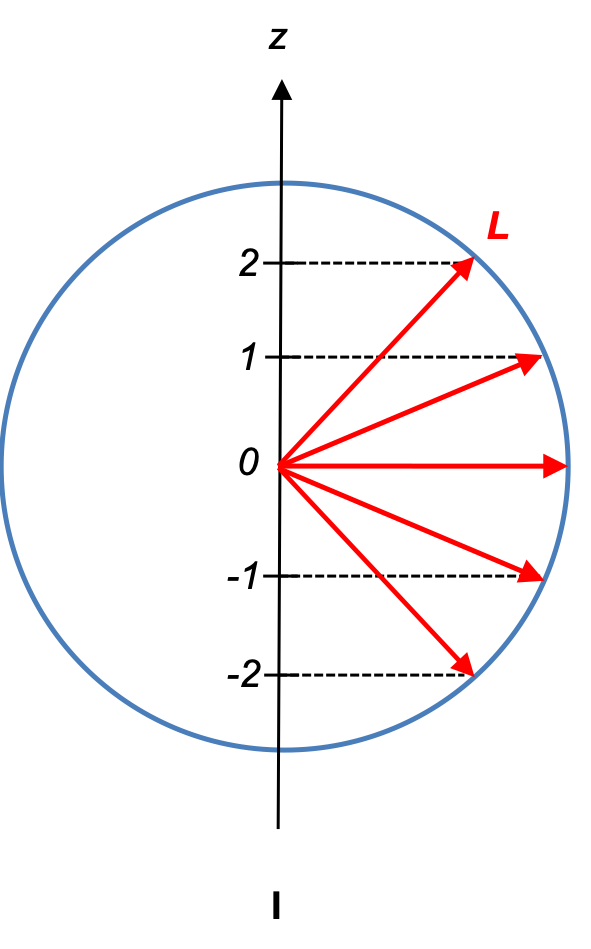

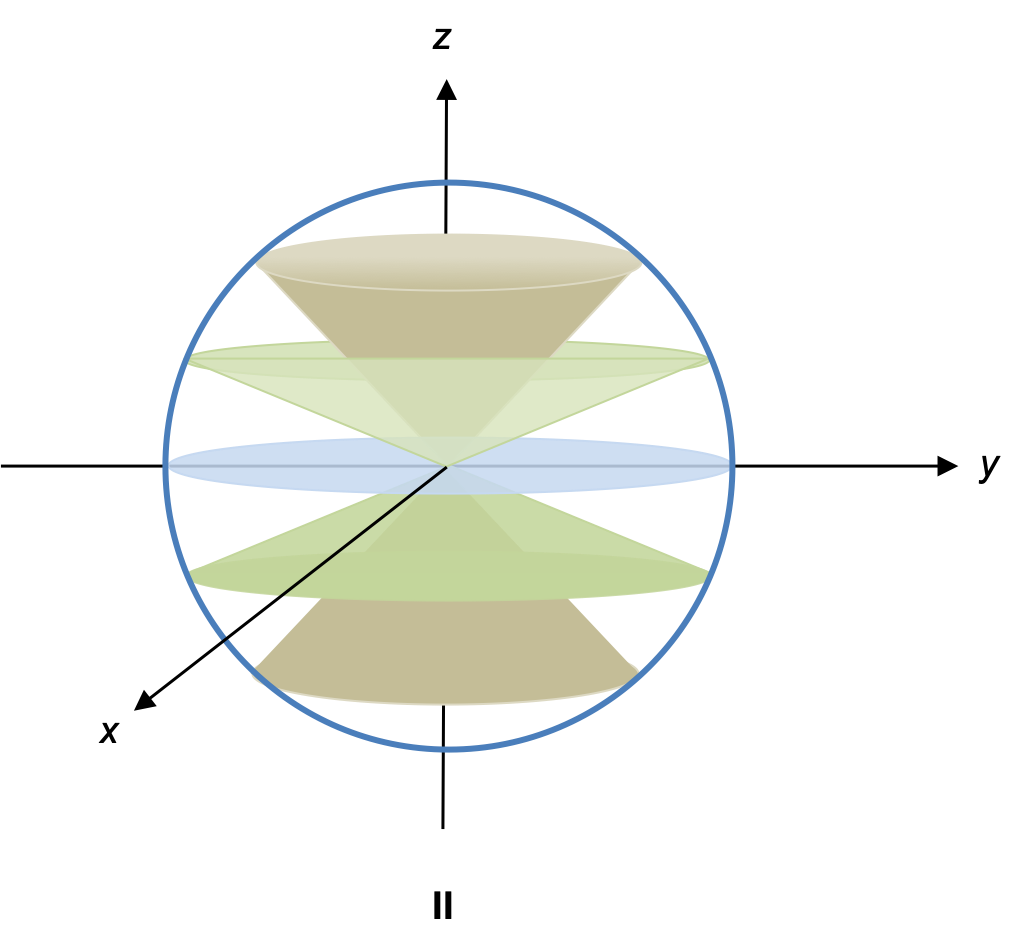

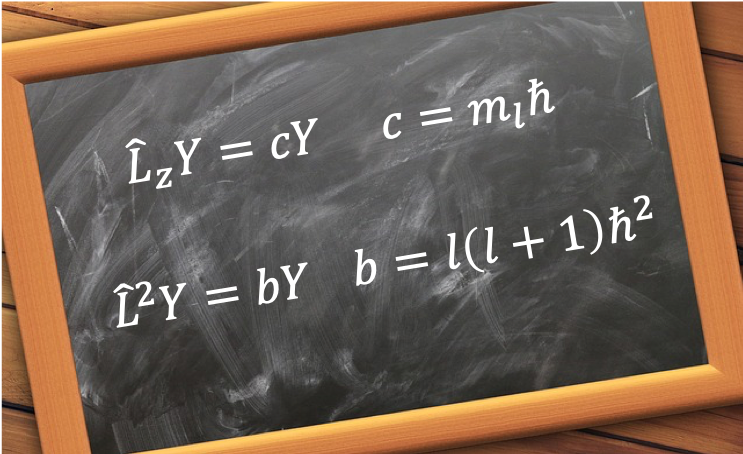

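From eq75, each of the eigenvalues of $\hat{L}^2$ is the square of the magnitude of the orbital angular momentum of an electron, which makes each of the eigenvalues of $\hat{L}_z$ the $z$-component of the orbital angular momentum of an electron. Since $\hat{S}_z$ is the analogue of $\hat{L}_z$, we postulate that each of the eigenvalues of $\hat{S}_z$ is the $z$-component of the spin angular momentum of an electron, which from eq168, is $m_s\hbar$. Therefore, we have

$$E_{m_s}=-\gamma_eBm_s\hbar$$

so that an absorption at frequency $\nu$ corresponds to $h\nu=E_{+1/2}-E_{-1/2}=-\gamma_eB\hbar$, which gives $\gamma_e=-2\pi\nu/B\approx-1.76\times10^{11}\ \text{s}^{-1}\,\text{T}^{-1}$.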
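As a rough numerical check of the relation $E_{m_s}=-\gamma_eBm_s\hbar$, the Python sketch below estimates $\gamma_e$ from a resonance frequency and compares the energies of the two spin states. The frequency of 28 GHz at 1 T is an assumed, illustrative value rather than a measurement reported here, and the function names are hypothetical.

```python
import math

H = 6.62607015e-34          # Planck constant in J s (exact SI value)
HBAR = H / (2 * math.pi)    # reduced Planck constant in J s


def gyromagnetic_ratio_from_resonance(nu_hz, b_tesla):
    """Solve h*nu = -gamma_e * B * hbar for gamma_e (in s^-1 T^-1)."""
    return -2 * math.pi * nu_hz / b_tesla


def spin_energy(gamma, b_tesla, m_s):
    """Energy E = -gamma * B * m_s * hbar of a spin state, in joules."""
    return -gamma * b_tesla * m_s * HBAR


nu = 28.0e9   # assumed absorption frequency in Hz (illustrative)
B = 1.0       # magnetic field magnitude in T, as in the experiment

gamma_e = gyromagnetic_ratio_from_resonance(nu, B)
e_up = spin_energy(gamma_e, B, +0.5)
e_down = spin_energy(gamma_e, B, -0.5)

print(f"gamma_e = {gamma_e:.3e} s^-1 T^-1")
print(f"E(+1/2) = {e_up:.3e} J, E(-1/2) = {e_down:.3e} J")
print(f"gap / (h * nu) = {(e_up - e_down) / (H * nu):.6f}")
```

Because $\gamma_e$ comes out negative, the state with $m_s=-\frac{1}{2}$ has the lower energy, and the energy gap equals $h\nu$ by construction.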

Question

Why is the spin magnetic quantum number $m_s=-\frac{1}{2}$ associated with the lower energy state?

Answer

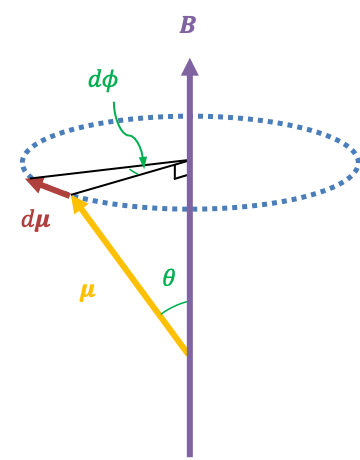

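The classical gyromagnetic ratio of a charged particle is $\gamma=\frac{q}{2m}$. Since the electron has a negative charge, its gyromagnetic ratio $\gamma_e$ is negative. Therefore, $E_{m_s}=-\gamma_eBm_s\hbar<0$ if $m_s=-\frac{1}{2}$.

If we replace $q$ with $-e$ and $m$ with $m_e$ in $\gamma=\frac{q}{2m}$, we have $\gamma=-\frac{e}{2m_e}\approx-8.79\times10^{10}\ \text{s}^{-1}\,\text{T}^{-1}$. So, $\gamma_e$ (about $-1.76\times10^{11}\ \text{s}^{-1}\,\text{T}^{-1}$) is about twice the value of $\gamma$. Due to this difference, the classical notion of the electron spinning on its own axis (which is equivalent to a current loop) has no physical reality. The gyromagnetic ratio of the electron is formally defined as:

$$\gamma_e=-g_e\frac{e}{2m_e}$$

where $g_e$ is the g-value of the electron, which is measured in a recent experiment to be 2.00231930436256 with a relative uncertainty of $1.7\times10^{-13}$.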
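The factor of about two can be checked numerically. The following Python sketch evaluates the classical ratio $-\frac{e}{2m_e}$ and scales it by the g-value quoted above. The values used for $e$ and $m_e$ are standard reference constants that are not given in the text, and the variable names are illustrative only.

```python
E_CHARGE = 1.602176634e-19    # elementary charge in C (exact SI value)
M_E = 9.1093837015e-31        # electron mass in kg (CODATA 2018, assumed here)
G_E = 2.00231930436256        # electron g-value as quoted in the text

# Classical gyromagnetic ratio q / (2m) with q = -e and m = m_e
gamma_classical = -E_CHARGE / (2 * M_E)

# Electron gyromagnetic ratio, gamma_e = -g_e * e / (2 * m_e)
gamma_e = G_E * gamma_classical

print(f"classical gamma = {gamma_classical:.4e} s^-1 T^-1")
print(f"gamma_e         = {gamma_e:.4e} s^-1 T^-1")
print(f"ratio           = {gamma_e / gamma_classical:.6f}")
```

The ratio of the two values is simply $g_e$, which is why a $g_e$ close to 2 corresponds to $\gamma_e$ being about twice the classical value.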

This experiment also provides evidence that the spin angular momentum quantum number of an electron is $s=\frac{1}{2}$. Since the sole transition is between the two spin states of the electron, and that $m_s=-s,-s+1,\dots,s$,