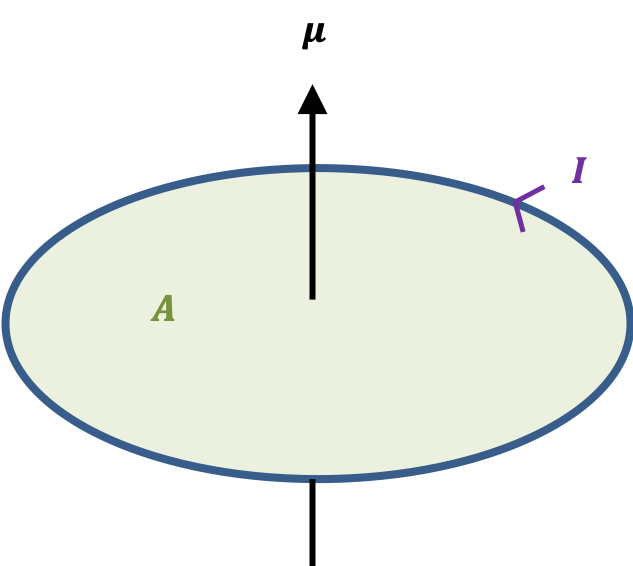

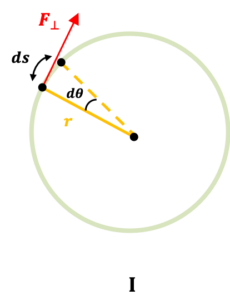

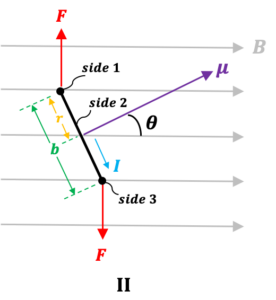

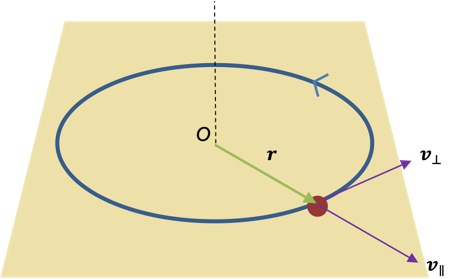

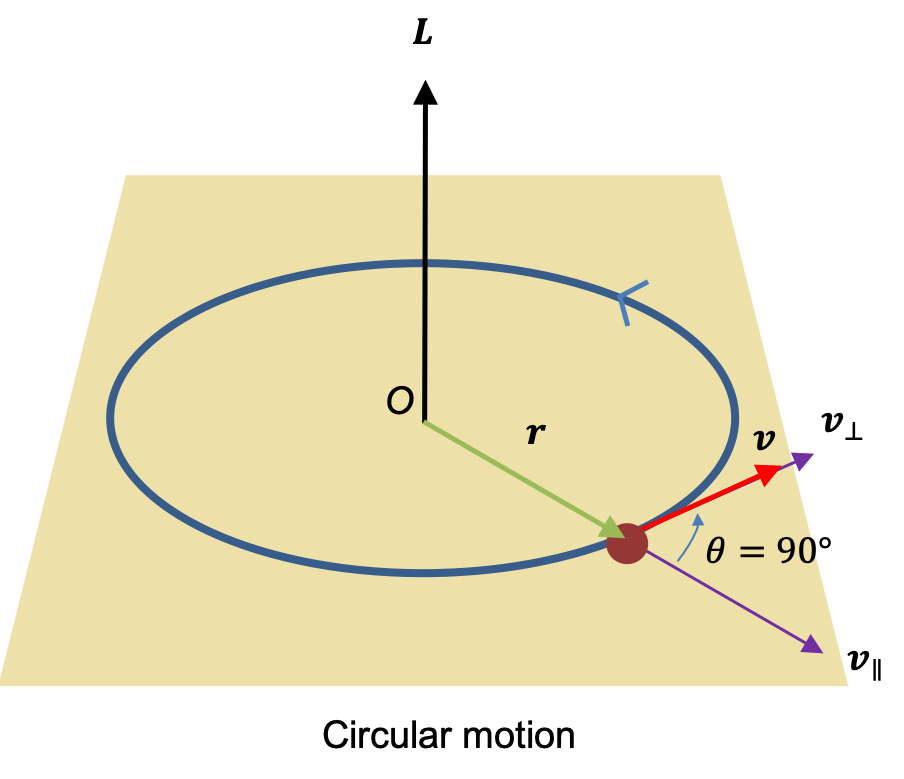

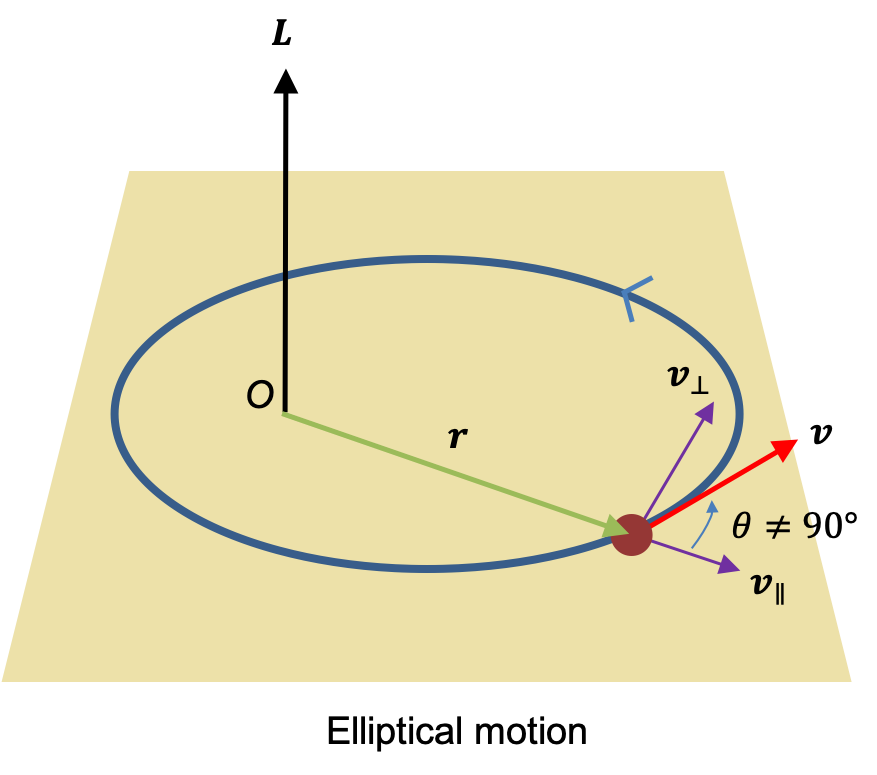

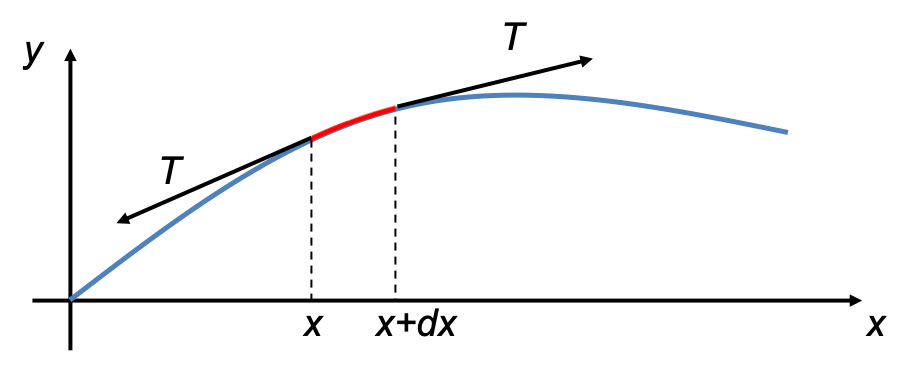

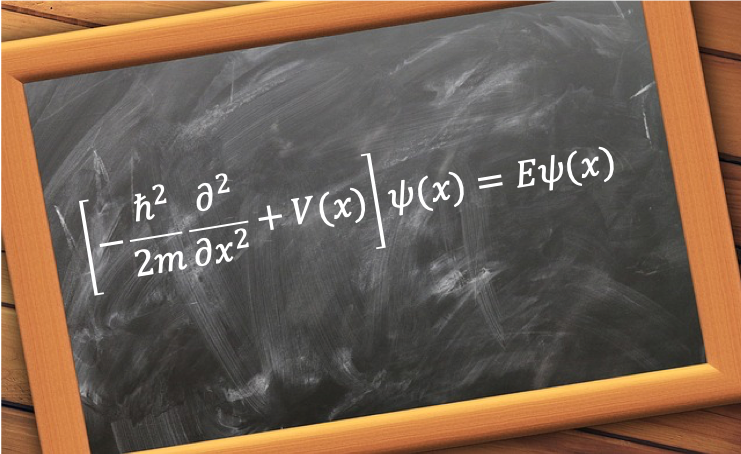

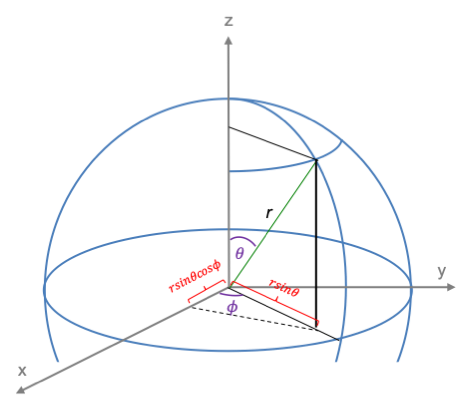

The quantum orbital angular momentum operators in spherical coordinates are derived using the following diagram:

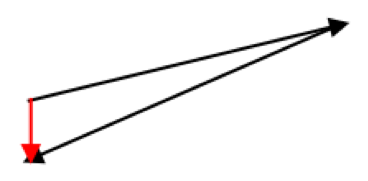

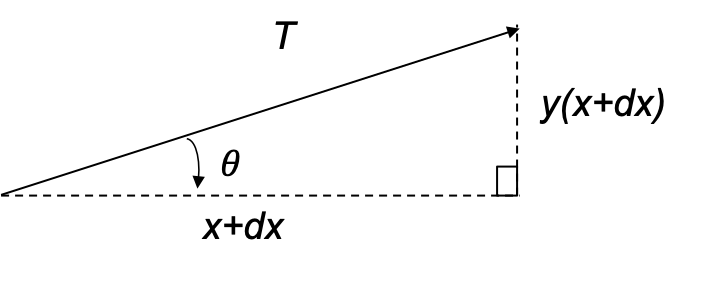

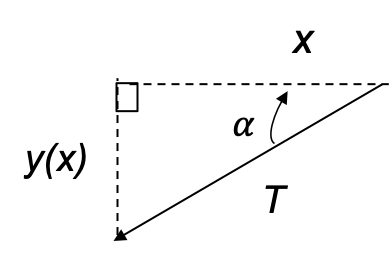

where

Therefore,

Similarly,

Furthermore, by differentiating implicitly with respect to

and separately with respect to

, and rearranging, we have
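As a reference point, the standard relations between Cartesian and spherical coordinates are sketched below. This assumes the usual physics convention, in which $\theta$ is the polar angle measured from the $z$-axis and $\phi$ is the azimuthal angle in the $xy$-plane; the symbols are assumptions and may differ from those in the diagram.

$$
\begin{aligned}
x &= r\sin\theta\cos\phi, & y &= r\sin\theta\sin\phi, & z &= r\cos\theta,\\
r^2 &= x^2 + y^2 + z^2, & \cos\theta &= \frac{z}{r}, & \tan\phi &= \frac{y}{x}.
\end{aligned}
$$

Differentiating $r^2 = x^2 + y^2 + z^2$ implicitly with respect to $x$, for example, gives $2r\,\partial r/\partial x = 2x$, so $\partial r/\partial x = x/r = \sin\theta\cos\phi$.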

Question

Show that ,

and

.

Answer

To find expressions for and

, we let

for the first three equations of eq77, which gives us

,

and

. So,

Substituting these two expressions back into either the first or second equation of eq77, we have

Implicit differentiation of ,

and

with respect to

,

and

respectively gives

Since is independent of
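For orientation, the nine partial derivatives that this kind of implicit differentiation produces are collected below. They follow from $r^2 = x^2+y^2+z^2$, $\cos\theta = z/r$ and $\tan\phi = y/x$, assuming the standard physics convention for $\theta$ and $\phi$; how they map onto the numbered equations in the text is not assumed here.

$$
\begin{aligned}
\frac{\partial r}{\partial x} &= \sin\theta\cos\phi, &
\frac{\partial r}{\partial y} &= \sin\theta\sin\phi, &
\frac{\partial r}{\partial z} &= \cos\theta,\\
\frac{\partial \theta}{\partial x} &= \frac{\cos\theta\cos\phi}{r}, &
\frac{\partial \theta}{\partial y} &= \frac{\cos\theta\sin\phi}{r}, &
\frac{\partial \theta}{\partial z} &= -\frac{\sin\theta}{r},\\
\frac{\partial \phi}{\partial x} &= -\frac{\sin\phi}{r\sin\theta}, &
\frac{\partial \phi}{\partial y} &= \frac{\cos\phi}{r\sin\theta}, &
\frac{\partial \phi}{\partial z} &= 0.
\end{aligned}
$$

The last entry reflects the fact that $\tan\phi = y/x$ contains no $z$.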

Applying the multivariable chain rule to , we have:
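In generic form, for a function $f(r,\theta,\phi)$ whose arguments each depend on $x$, $y$ and $z$ (the symbol $f$ is an assumption used only for illustration), the multivariable chain rule reads:

$$
\begin{aligned}
\frac{\partial f}{\partial x} &= \frac{\partial r}{\partial x}\frac{\partial f}{\partial r} + \frac{\partial \theta}{\partial x}\frac{\partial f}{\partial \theta} + \frac{\partial \phi}{\partial x}\frac{\partial f}{\partial \phi},\\
\frac{\partial f}{\partial y} &= \frac{\partial r}{\partial y}\frac{\partial f}{\partial r} + \frac{\partial \theta}{\partial y}\frac{\partial f}{\partial \theta} + \frac{\partial \phi}{\partial y}\frac{\partial f}{\partial \phi},\\
\frac{\partial f}{\partial z} &= \frac{\partial r}{\partial z}\frac{\partial f}{\partial r} + \frac{\partial \theta}{\partial z}\frac{\partial f}{\partial \theta} + \frac{\partial \phi}{\partial z}\frac{\partial f}{\partial \phi}.
\end{aligned}
$$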

Substituting (i) eq78, eq81 and eq84 into eq87, (ii) eq79, eq82 and eq85 into eq88, and (iii) eq80, eq83 and eq86 into eq89, we have

respectively.
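In the standard physics convention ($\theta$ polar, $\phi$ azimuthal, both assumed here), the Cartesian derivative operators expressed in spherical coordinates take the following well-known form, which is what a substitution of this kind is expected to yield:

$$
\begin{aligned}
\frac{\partial}{\partial x} &= \sin\theta\cos\phi\,\frac{\partial}{\partial r} + \frac{\cos\theta\cos\phi}{r}\frac{\partial}{\partial \theta} - \frac{\sin\phi}{r\sin\theta}\frac{\partial}{\partial \phi},\\
\frac{\partial}{\partial y} &= \sin\theta\sin\phi\,\frac{\partial}{\partial r} + \frac{\cos\theta\sin\phi}{r}\frac{\partial}{\partial \theta} + \frac{\cos\phi}{r\sin\theta}\frac{\partial}{\partial \phi},\\
\frac{\partial}{\partial z} &= \cos\theta\,\frac{\partial}{\partial r} - \frac{\sin\theta}{r}\frac{\partial}{\partial \theta}.
\end{aligned}
$$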

Substituting eq77, eq90, eq91 and eq92 into eq72, eq73 and eq74, we have

respectively.
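As a cross-check, the standard spherical-coordinate forms of the three components are given below. This sketch assumes the usual Cartesian definitions $\hat{L}_x = -i\hbar\left(y\,\partial_z - z\,\partial_y\right)$, $\hat{L}_y = -i\hbar\left(z\,\partial_x - x\,\partial_z\right)$ and $\hat{L}_z = -i\hbar\left(x\,\partial_y - y\,\partial_x\right)$; the hat notation is an assumption.

$$
\begin{aligned}
\hat{L}_x &= i\hbar\left(\sin\phi\,\frac{\partial}{\partial \theta} + \cot\theta\cos\phi\,\frac{\partial}{\partial \phi}\right),\\
\hat{L}_y &= -i\hbar\left(\cos\phi\,\frac{\partial}{\partial \theta} - \cot\theta\sin\phi\,\frac{\partial}{\partial \phi}\right),\\
\hat{L}_z &= -i\hbar\,\frac{\partial}{\partial \phi}.
\end{aligned}
$$

All terms in $\partial/\partial r$ cancel, so the components act only on the angular variables.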

Substituting eq93, eq94 and eq95 into eq75, we have, after some algebra,

or equivalently
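For comparison, the two equivalent standard forms of the squared operator are shown here, assuming $\hat{L}^2 = \hat{L}_x^2 + \hat{L}_y^2 + \hat{L}_z^2$ and the usual physics convention for $\theta$ and $\phi$:

$$
\hat{L}^2 = -\hbar^2\left(\frac{\partial^2}{\partial \theta^2} + \cot\theta\,\frac{\partial}{\partial \theta} + \frac{1}{\sin^2\theta}\frac{\partial^2}{\partial \phi^2}\right)
= -\hbar^2\left[\frac{1}{\sin\theta}\frac{\partial}{\partial \theta}\left(\sin\theta\,\frac{\partial}{\partial \theta}\right) + \frac{1}{\sin^2\theta}\frac{\partial^2}{\partial \phi^2}\right].
$$

The second form follows from the first by the product rule, since $\frac{1}{\sin\theta}\frac{\partial}{\partial\theta}\left(\sin\theta\,\frac{\partial f}{\partial\theta}\right) = \frac{\partial^2 f}{\partial\theta^2} + \cot\theta\,\frac{\partial f}{\partial\theta}$.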

$\hat{L}^2$ is the square of the quantum orbital angular momentum operator, and each of its eigenvalues is the square of the magnitude of the orbital angular momentum of an electron.
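The algebra behind this result is easy to get wrong by hand, so a symbolic check can be useful. The following is a minimal sketch using SymPy, assuming the standard spherical forms of the three components; the function names `L_x`, `L_y`, `L_z`, `L_squared` and the test functions `f` and `Y11` are illustrative choices, not taken from the text.

```python
import sympy as sp

theta, phi = sp.symbols("theta phi", real=True)
hbar = sp.symbols("hbar", positive=True)

# Arbitrary (unspecified) test function of the two angles.
f = sp.Function("f")(theta, phi)

# cot(theta) written with sin and cos so that simplification stays elementary.
cot_theta = sp.cos(theta) / sp.sin(theta)


def L_x(g):
    """Assumed standard form: i*hbar*(sin(phi) d/dtheta + cot(theta) cos(phi) d/dphi)."""
    return sp.I * hbar * (sp.sin(phi) * sp.diff(g, theta)
                          + cot_theta * sp.cos(phi) * sp.diff(g, phi))


def L_y(g):
    """Assumed standard form: -i*hbar*(cos(phi) d/dtheta - cot(theta) sin(phi) d/dphi)."""
    return -sp.I * hbar * (sp.cos(phi) * sp.diff(g, theta)
                           - cot_theta * sp.sin(phi) * sp.diff(g, phi))


def L_z(g):
    """Assumed standard form: -i*hbar d/dphi."""
    return -sp.I * hbar * sp.diff(g, phi)


def L_squared(g):
    """Closed form of L^2 acting on g(theta, phi)."""
    polar_part = sp.diff(sp.sin(theta) * sp.diff(g, theta), theta) / sp.sin(theta)
    azimuthal_part = sp.diff(g, phi, 2) / sp.sin(theta) ** 2
    return -hbar ** 2 * (polar_part + azimuthal_part)


# Sum of the squared components, applied to the arbitrary function f.
sum_of_squares = L_x(L_x(f)) + L_y(L_y(f)) + L_z(L_z(f))

# Difference between the component sum and the closed form; zero if they agree.
residual = sp.simplify(sp.expand(sum_of_squares - L_squared(f)))

# Concrete example: sin(theta)*exp(i*phi) is proportional to the l = 1, m = 1
# spherical harmonic, so L^2 should return l(l+1)*hbar^2 = 2*hbar^2 times it.
Y11 = sp.sin(theta) * sp.exp(sp.I * phi)
eigen_residual = sp.simplify(L_squared(Y11) - 2 * hbar ** 2 * Y11)

print(residual, eigen_residual)
```

Both residuals vanishing indicates that the component sum reproduces the closed form and that the chosen test function is an eigenfunction with eigenvalue $2\hbar^2$, consistent with $l(l+1)\hbar^2$ for $l = 1$.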

Question

Show that eq76 is in spherical coordinates.

Answer

Substituting eq93, eq94 and eq95, together with the Cartesian unit vectors expressed in spherical coordinates, into eq76, we have, after some algebra,
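A sketch of the ingredients, assuming eq76 has the usual form $\hat{\mathbf{L}} = \hat{\mathbf{i}}\hat{L}_x + \hat{\mathbf{j}}\hat{L}_y + \hat{\mathbf{k}}\hat{L}_z$ and writing the spherical unit vectors as $\hat{\mathbf{r}}$, $\hat{\boldsymbol{\theta}}$ and $\hat{\boldsymbol{\phi}}$ (notation assumed): the Cartesian unit vectors are

$$
\begin{aligned}
\hat{\mathbf{i}} &= \sin\theta\cos\phi\,\hat{\mathbf{r}} + \cos\theta\cos\phi\,\hat{\boldsymbol{\theta}} - \sin\phi\,\hat{\boldsymbol{\phi}},\\
\hat{\mathbf{j}} &= \sin\theta\sin\phi\,\hat{\mathbf{r}} + \cos\theta\sin\phi\,\hat{\boldsymbol{\theta}} + \cos\phi\,\hat{\boldsymbol{\phi}},\\
\hat{\mathbf{k}} &= \cos\theta\,\hat{\mathbf{r}} - \sin\theta\,\hat{\boldsymbol{\theta}},
\end{aligned}
$$

and collecting the coefficients of each spherical unit vector gives the standard result

$$
\hat{\mathbf{L}} = -i\hbar\left(\hat{\boldsymbol{\phi}}\,\frac{\partial}{\partial \theta} - \hat{\boldsymbol{\theta}}\,\frac{1}{\sin\theta}\frac{\partial}{\partial \phi}\right).
$$

The $\hat{\mathbf{r}}$ component cancels identically, as expected for $\mathbf{r}\times\mathbf{p}$, which is perpendicular to $\mathbf{r}$.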

Question

Show that .

Answer

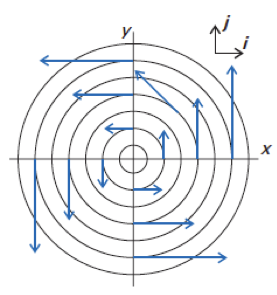

Substituting eq97 in and using

,

,

and

, we have
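One set of identities that makes a calculation of this kind go through is the set of angular derivatives of the spherical unit vectors. The sketch below assumes that the quantity being evaluated is $\hat{\mathbf{L}}\cdot\hat{\mathbf{L}}$ acting on a function $f(\theta,\phi)$, with $\hat{\mathbf{L}} = -i\hbar\left(\hat{\boldsymbol{\phi}}\,\partial_\theta - \hat{\boldsymbol{\theta}}\,\frac{1}{\sin\theta}\partial_\phi\right)$; both the setup and the notation are assumptions.

$$
\frac{\partial \hat{\boldsymbol{\theta}}}{\partial \theta} = -\hat{\mathbf{r}},\qquad
\frac{\partial \hat{\boldsymbol{\theta}}}{\partial \phi} = \cos\theta\,\hat{\boldsymbol{\phi}},\qquad
\frac{\partial \hat{\boldsymbol{\phi}}}{\partial \theta} = \mathbf{0},\qquad
\frac{\partial \hat{\boldsymbol{\phi}}}{\partial \phi} = -\sin\theta\,\hat{\mathbf{r}} - \cos\theta\,\hat{\boldsymbol{\theta}}.
$$

With these, and the orthonormality of $\hat{\mathbf{r}}$, $\hat{\boldsymbol{\theta}}$ and $\hat{\boldsymbol{\phi}}$, the four terms of the dot product reduce to

$$
\hat{\mathbf{L}}\cdot\hat{\mathbf{L}}\,f = -\hbar^2\left(\frac{\partial^2 f}{\partial \theta^2} + \cot\theta\,\frac{\partial f}{\partial \theta} + \frac{1}{\sin^2\theta}\frac{\partial^2 f}{\partial \phi^2}\right),
$$

where the $\cot\theta$ term arises solely from $\hat{\boldsymbol{\theta}}\cdot\partial\hat{\boldsymbol{\phi}}/\partial\phi = -\cos\theta$.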